AI is everywhere in music right now. But between the breathless headlines and doomsday predictions, what's actually useful for working artists? We sort signal from noise.

TL;DR

AI tools for stem separation, mastering, and mixing assistance are genuinely useful today. AI composition tools produce impressive demos but aren't replacing human creativity. The real impact is democratisation: AI is making professional tools accessible to everyone, which is exactly what we champion.

The AI Music Tools That Actually Deliver

Let's separate the genuinely useful from the merely impressive. Stem separation (Demucs, LALAL.AI) works well enough for professional use. AI mastering (LANDR, eMastered) produces good results for independent releases. AI-assisted mixing tools (iZotope Neutron, Sonible smart:EQ) make intelligent suggestions that speed up workflow.

These tools share a common thread: they augment human creativity rather than replacing it. They handle technical tasks that require computation rather than artistic judgment. And they're most useful precisely because they're boring — they do tedious things faster, freeing you to focus on creative decisions.

The pattern is clear: AI excels at analysis and processing tasks in music production. It can identify frequency imbalances, suggest EQ curves, separate audio sources, and optimise loudness. What it cannot do — and this is crucial — is tell you whether a snare sound fits the emotional arc of your song. That remains stubbornly, beautifully human.

AI Composition: Impressive But Not the Threat You Think

Tools like Suno and Udio can generate complete songs, vocals included, from text prompts, and Google's MusicLM does something similar for instrumental music. And yes, some of the output is genuinely impressive at first listen. You can type 'melancholic indie folk song about rain in Manchester' and get something that sounds like a song within seconds.

But listen closer and the limitations appear. AI-generated music tends toward the generic — it can replicate genre conventions but struggles with the specific, weird, personal choices that make music memorable. It lacks the intentionality that comes from a human choosing to put exactly that chord in exactly that place because of exactly how it makes them feel.

The real concern isn't AI replacing artists — it's AI flooding platforms with mediocre content that drowns out human-made music. Spotify already has a problem with AI-generated tracks uploaded at industrial scale to farm streams. This is a platform and policy problem, not an artistic one.

What This Means for Independent Artists

For independent artists, AI is overwhelmingly positive in the short term. Tools that used to require expensive software or professional engineers are now accessible to anyone with a laptop. A bedroom producer in 2025 has AI-powered mastering, mixing assistance, stem separation, and audio repair tools that a professional studio didn't have ten years ago.

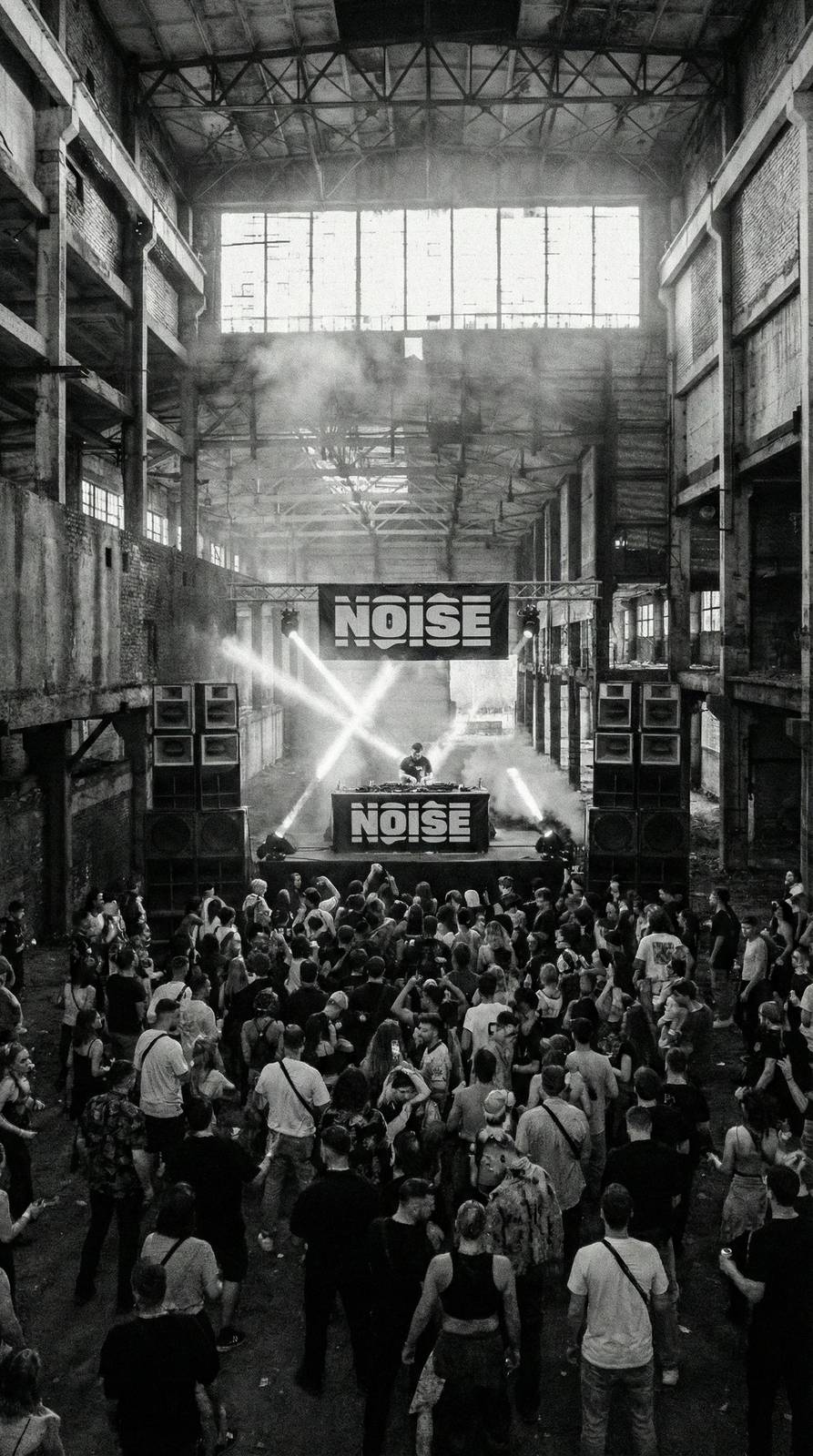

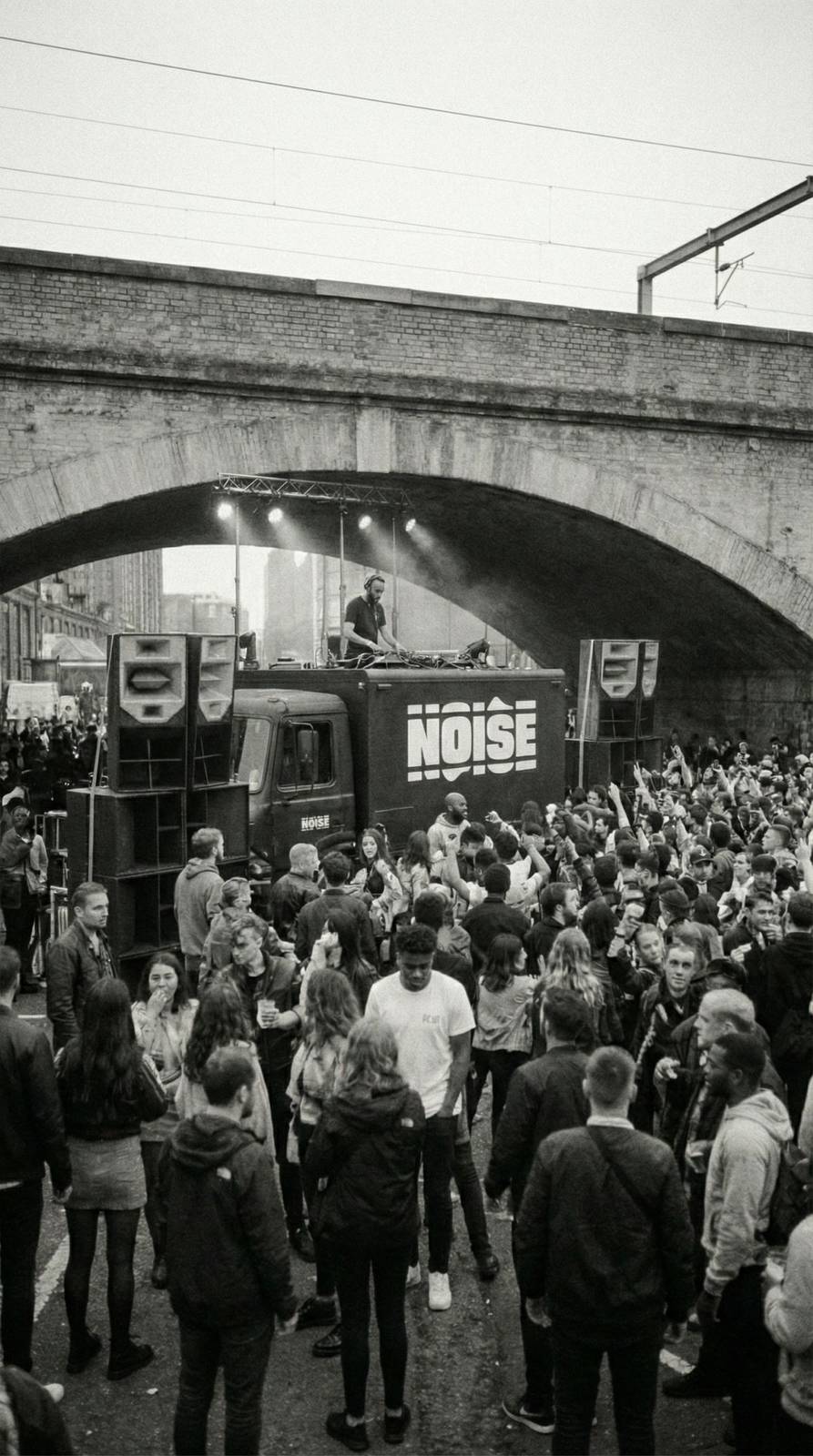

The democratisation angle is what excites us most at Noise. When a first-generation musician in a council flat can produce, mix, and master a release that competes sonically with major label output — and AI tools help make that possible — the industry gets more diverse, more interesting, and more representative of the world's actual musical talent.

But there's an important caveat: these tools work best when you understand the fundamentals. AI mastering works better when your mix is good. AI mixing suggestions are more useful when you understand what they're suggesting and why. Learning the craft remains essential — AI doesn't replace knowledge, it amplifies it.

The Copyright and Ethics Question Nobody's Solved

The legal landscape around AI and music is a genuine mess. Companies that trained AI models on copyrighted music without permission face lawsuits from major labels and publishers. The question of whether AI-generated music can be copyrighted remains contentious (in the US, purely AI-generated work with no meaningful human authorship currently can't be). And the ethics of AI models that can replicate specific artists' voices are deeply troubling.

At Noise, our position is clear: AI tools that help artists create should be celebrated. AI tools that replicate artists without consent or compensation should be opposed. The distinction is between AI as a tool (like a synthesiser or a DAW) and AI as a replacement (like a counterfeit version of a human creator).

The music industry needs proactive regulation and fair compensation frameworks for AI training data. Artists whose work was used to train these models deserve recognition and payment. This isn't anti-technology — it's pro-artist, and the two aren't mutually exclusive.

Our Prediction: Where This Goes

Within five years, AI will be invisibly embedded in every DAW. Intelligent auto-tuning, context-aware effects, real-time arrangement suggestions, and one-click mastering will be standard features, not standalone products. The tools will disappear into the background and artists will forget they're using AI, the same way nobody thinks about the digital signal processing that makes their reverb plugin work.

The artists who thrive will be those who use AI to amplify their unique perspective rather than outsource their creativity. The human elements — emotion, intention, cultural context, lived experience — become more valuable as technical barriers fall. In a world where anyone can make a polished-sounding track, the differentiator is having something genuine to say.

And that's actually great news for the kind of artists Noise exists to champion: the authentic, the unconventional, the ones with stories that can't be algorithmically generated. The future belongs to artists who are irreplaceably human.